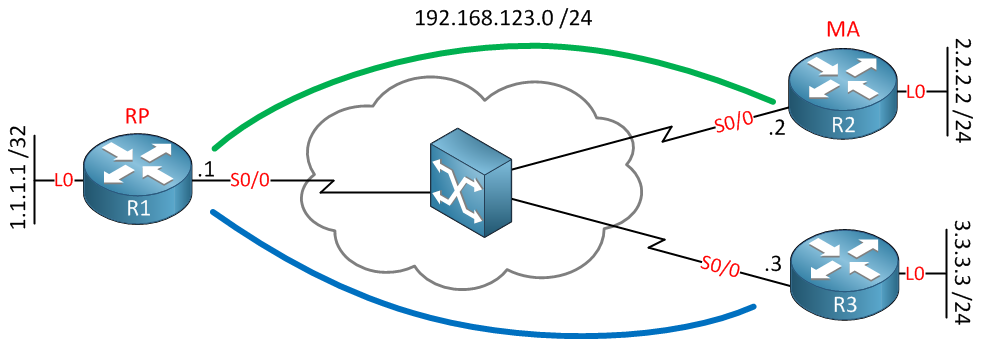

Multicast itself can be tricky enough but the real fun starts when you try to run it over a network like frame-relay. One of the scenarios you can encounter is having a mapping agent behind one of the spokes, you will discover that only the hub router will receive the auto-rp information from the mapping agent, not the other spokes. Let me show you an example what will go wrong and how to fix it. This is the topology we’ll be using:

Above you see a frame-relay network with a hub (R1) and two spokes (R2 and R3). The network is configured as frame-relay point-to-multipoint: the routers believe they have full reachability, but in reality there are only two PVCs, which makes this a classic hub-and-spoke topology. Each router has a loopback0 interface that is advertised in EIGRP for reachability.

R1 will be our RP (Rendezvous Point) and R2 will be our mapping agent. In this scenario R3 never receives the information from the mapping agent, so it never learns that R1 is the RP. Here is our configuration:

R1(config)#ip multicast-routing

R2(config)#ip multicast-routing

R3(config)#ip multicast-routing

First of all, enable multicast routing on all routers; if you forget this, multicast won’t work at all.

Since I am using some loopback interfaces, we’ll need some routing. I will use EIGRP to advertise all interfaces:

R1(config)#router eigrp 1
R1(config-router)#no auto-summary
R1(config-router)#network 192.168.123.0
R1(config-router)#network 1.0.0.0

R2(config)#router eigrp 1
R2(config-router)#no auto-summary
R2(config-router)#network 2.0.0.0
R2(config-router)#network 192.168.123.0

R3(config)#router eigrp 1
R3(config-router)#no auto-summary
R3(config-router)#network 192.168.123.0
R3(config-router)#network 3.0.0.0

R1(config)#interface serial 0/0
R1(config-if)#ip pim sparse-dense-mode

R2(config)#interface serial 0/0
R2(config-if)#ip pim sparse-dense-mode

R3(config)#interface serial 0/0
R3(config-if)#ip pim sparse-dense-mode

On all routers we enable sparse-dense mode. A group for which an RP is known is forwarded in sparse mode; a group without an RP falls back to dense mode. Because of this, the Auto-RP groups 224.0.1.39 and 224.0.1.40 are forwarded in dense mode. Keep in mind that candidate RPs announce themselves to 224.0.1.39, and mapping agents use 224.0.1.40 to send the RP-to-group information to the other routers.
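To make the sparse-dense decision concrete, here is a toy Python model of the rule described above. It is not IOS code: the function name `forwarding_mode` and the dictionary format for the RP mappings are assumptions made for illustration. The model also assumes the Auto-RP groups are always handled in dense mode, which matches the `D` flag on the 224.0.1.39 and 224.0.1.40 entries in the outputs in this post, even after an RP for 224.0.0.0/4 is known.

```python
import ipaddress

# Auto-RP control groups. This sketch always treats them as dense, matching the
# "D" flag they carry in the show ip mroute outputs even when an RP is known.
AUTO_RP_GROUPS = {
    ipaddress.ip_address("224.0.1.39"),  # RP-Announce: candidate RPs -> mapping agents
    ipaddress.ip_address("224.0.1.40"),  # RP-Discovery: mapping agents -> all routers
}


def forwarding_mode(group: str, rp_mappings: dict[str, str]) -> str:
    """Return 'sparse' or 'dense' for a group on a sparse-dense-mode interface.

    rp_mappings is a hypothetical format: group range -> RP address,
    similar to what 'show ip pim rp mapping' displays.
    """
    addr = ipaddress.ip_address(group)
    if addr in AUTO_RP_GROUPS:
        return "dense"
    for group_range in rp_mappings:
        if addr in ipaddress.ip_network(group_range):
            return "sparse"  # an RP is known for this group
    return "dense"  # no RP known: fall back to dense mode


print(forwarding_mode("239.1.1.1", {}))                            # dense
print(forwarding_mode("239.1.1.1", {"224.0.0.0/4": "1.1.1.1"}))    # sparse
print(forwarding_mode("224.0.1.40", {"224.0.0.0/4": "1.1.1.1"}))   # dense
```

The first call corresponds to R3 as it is in this scenario: without any RP mapping, even ordinary groups such as 239.1.1.1 would be flooded in dense mode.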

R1#show ip pim neighbor

PIM Neighbor Table

Mode: B - Bidir Capable, DR - Designated Router, N - Default DR Priority,

S - State Refresh Capable

Neighbor Interface Uptime/Expires Ver DR

Address Prio/Mode

192.168.123.3 Serial0/0 00:02:10/00:01:32 v2 1 / DR S

192.168.123.2 Serial0/0 00:02:16/00:01:28 v2 1 / S

A quick check doesn’t hurt: R1 sees both R2 and R3 as PIM neighbors. Let’s configure R1 as the rendezvous point:

R1(config)#interface loopback 0

R1(config-if)#ip pim sparse-dense-mode

R1(config)#ip pim send-rp-announce loopback 0 scope 10

I use the loopback0 interface as the source IP address for the RP announcements, and scope 10 sets the TTL of those announcements to 10. Here you can see that R1 is a working RP:

R1#show ip pim rp mapping

PIM Group-to-RP Mappings

This system is an RP (Auto-RP)

Now let’s configure the mapping agent on R2:

R2(config)#interface loopback 0

R2(config-if)#ip pim sparse-dense-mode

R2(config)#ip pim send-rp-discovery loopback 0 scope 10

The mapping agent uses its loopback0 interface as the source address of its RP-Discovery messages. Let’s verify our work:

R2#show ip pim rp mapping

PIM Group-to-RP Mappings

This system is an RP-mapping agent (Loopback0)

Group(s) 224.0.0.0/4

RP 1.1.1.1 (?), v2v1

Info source: 1.1.1.1 (?), elected via Auto-RP

Uptime: 00:00:17, expires: 00:02:41

This is looking good. R2 is the mapping agent and it has discovered R1 as a rendezvous point. Let’s take a look at the multicast routing table:

R2#show ip mroute 224.0.1.39

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 224.0.1.39), 00:08:18/stopped, RP 0.0.0.0, flags: DCL

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Loopback0, Forward/Sparse-Dense, 00:05:54/00:00:00

Serial0/0, Forward/Sparse-Dense, 00:08:18/00:00:00

(1.1.1.1, 224.0.1.39), 00:08:18/00:02:42, flags: LT

Incoming interface: Serial0/0, RPF nbr 192.168.123.1

Outgoing interface list:

Loopback0, Forward/Sparse-Dense, 00:05:54/00:00:00

The (1.1.1.1, 224.0.1.39) entry shows that our mapping agent receives the RP announcements: 1.1.1.1 (the loopback0 interface of R1) is sending to multicast group address 224.0.1.39. Let’s see if our mapping agent is advertising this to other routers:

R2#show ip mroute 224.0.1.40

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 224.0.1.40), 00:08:11/stopped, RP 0.0.0.0, flags: DCL

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Loopback0, Forward/Sparse-Dense, 00:02:13/00:00:00

Serial0/0, Forward/Sparse-Dense, 00:08:11/00:00:00

(2.2.2.2, 224.0.1.40), 00:01:38/00:02:21, flags: LT

Incoming interface: Loopback0, RPF nbr 0.0.0.0

Outgoing interface list:

Serial0/0, Forward/Sparse-Dense, 00:01:38/00:00:00

This is looking good too. The mapping agent is sending the RP-to-group information to 224.0.1.40, and its outgoing interface is Serial0/0. Now let’s check the RP:

R1#show ip mroute 224.0.1.40

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 224.0.1.40), 00:16:00/stopped, RP 0.0.0.0, flags: DCL

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Serial0/0, Forward/Sparse-Dense, 00:16:00/00:00:00

(2.2.2.2, 224.0.1.40), 00:08:48/00:02:09, flags: PLT

Incoming interface: Serial0/0, RPF nbr 192.168.123.2

Outgoing interface list: Null

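Above you see that the RP receives the RP-Discovery messages from the mapping agent on Serial0/0, but the outgoing interface list is Null and the entry is pruned (P flag), so R1 doesn’t forward 224.0.1.40 at all. A multicast router never forwards a packet out of the interface it arrived on, and since R1 is the hub, both spokes sit behind that same Serial0/0 interface. This works like a split-horizon rule for multicast. Let’s see what R3 thinks about all this: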
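The following toy Python model (not IOS code) sketches why the hub ends up with an empty outgoing interface list. It assumes a simplified dense-mode rule: flood out of every interface that has PIM neighbors, except the interface the packet arrived on. The function name and the sub-interface names `Serial0/0.102` and `Serial0/0.103` are hypothetical.

```python
def dense_mode_oil(incoming: str, neighbor_interfaces: list[str]) -> list[str]:
    """Outgoing interface list for a dense-mode (S,G) entry in this simplified model:
    every interface with PIM neighbors except the incoming (RPF) interface."""
    return [ifc for ifc in neighbor_interfaces if ifc != incoming]


# R1 in this lab: both spokes are PIM neighbors on one point-to-multipoint interface.
print(dense_mode_oil("Serial0/0", ["Serial0/0"]))  # [] -> "Outgoing interface list: Null"

# Hypothetical point-to-point sub-interfaces, one per spoke (names are made up).
print(dense_mode_oil("Serial0/0.102", ["Serial0/0.102", "Serial0/0.103"]))  # ['Serial0/0.103']
```

R1’s Loopback0 is left out of the model because it has no PIM neighbors. The second call illustrates why splitting the hub interface into one sub-interface per spoke lets the hub forward the traffic: the incoming and outgoing interfaces are no longer the same.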

R3#show ip mroute 224.0.1.40

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 224.0.1.40), 00:17:36/00:02:58, RP 0.0.0.0, flags: DCL

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Serial0/0, Forward/Sparse-Dense, 00:17:36/00:00:00

As you can see, R3 has no (2.2.2.2, 224.0.1.40) entry, so it doesn’t receive anything from the mapping agent and doesn’t learn any RPs:

R3#show ip pim rp mapping

PIM Group-to-RP Mappings

The empty show ip pim rp mapping output confirms that R3 doesn’t know any rendezvous points. There are multiple solutions to fix this:

- Get rid of the point-to-multipoint interface and use point-to-point sub-interfaces. The hub router then receives and sends traffic on different interfaces, so it can forward multicast to all spoke routers.

- Move the mapping agent so that it is not behind a spoke router, for example onto the hub router or onto a router on the hub’s side of the network.

- Create a tunnel between the spoke routers so that they can learn directly from each other.

I will use the tunnel solution to solve this problem. We will create a tunnel between R2 and R3 so that R3 can learn from the mapping agent directly. Here’s what the configuration looks like:

R2(config)#interface tunnel 0
R2(config-if)#tunnel source loopback 0
R2(config-if)#tunnel destination 3.3.3.3
R2(config-if)#ip address 192.168.23.2 255.255.255.0
R2(config-if)#ip pim sparse-dense-mode

R3(config)#interface tunnel 0
R3(config-if)#tunnel source loopback 0
R3(config-if)#tunnel destination 2.2.2.2
R3(config-if)#ip address 192.168.23.3 255.255.255.0
R3(config-if)#ip pim sparse-dense-mode

Don’t forget to enable PIM on the tunnel interfaces. Let’s take another look at R3 to see if it learns anything:

R3#show ip mroute 224.0.1.40

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 224.0.1.40), 00:29:47/stopped, RP 0.0.0.0, flags: DCL

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Tunnel0, Forward/Sparse-Dense, 00:01:12/00:00:00

Serial0/0, Forward/Sparse-Dense, 00:29:47/00:00:00

(2.2.2.2, 224.0.1.40), 00:00:26/00:02:33, flags: L

Incoming interface: Serial0/0, RPF nbr 192.168.123.1

Outgoing interface list:

Tunnel0, Forward/Sparse-Dense, 00:00:26/00:00:00

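R3 now has an entry for the mapping agent, but something is still not quite right. The RP-Discovery messages arrive through the tunnel interface, yet the incoming interface is Serial0/0 with RPF neighbor 192.168.123.1, because the unicast route to 2.2.2.2 points towards the hub. Let’s enable a debug so I can show you something: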

R3#debug ip mpacket

IP multicast packets debugging is on

IP(0): s=2.2.2.2 (Tunnel0) d=224.0.1.40 id=936, ttl=9, prot=17, len=48(48), not RPF interface

With the debug enabled you’ll see the message above: packets from 2.2.2.2 arrive on Tunnel0, which is not the RPF interface, so R3 drops them.

Be aware that you might have to disable multicast route caching, or you won’t see any multicast debug information. Use the “no ip mroute-cache” interface command to disable it.

You can also spot RPF errors using the following command:

R3#show ip rpf 2.2.2.2

RPF information for ? (2.2.2.2)

RPF interface: Serial0/0

RPF neighbor: ? (192.168.123.1)

RPF route/mask: 2.2.2.2/32

RPF type: unicast (ospf 1)

RPF recursion count: 0

Doing distance-preferred lookups across tables

The debug and the show ip rpf output both show that R3 expects traffic from 2.2.2.2 on Serial0/0, with RPF neighbor 192.168.123.1 (the hub), not on the tunnel. We need to fix this RPF error by making the tunnel the RPF interface for 2.2.2.2:

R3(config)#ip mroute 2.2.2.2 255.255.255.255 tunnel 0

We create a static multicast route for the mapping agent’s address that points to the tunnel interface. A static mroute is only used for the RPF check; it doesn’t change unicast forwarding.
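Here is a simplified Python model (not IOS code) of the lookup that the "Doing distance-preferred lookups across tables" line in the show ip rpf output refers to: take the longest match in the static mroute table and in the unicast table, then keep the one with the lowest administrative distance. It ignores MBGP and DVMRP routes, and the `Route` class and function names are hypothetical. The static mroute uses distance 0, the default for `ip mroute`; the unicast distance of 110 matches the OSPF route shown by show ip rpf (an internal EIGRP route with distance 90 gives the same result).

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class Route:
    prefix: str     # e.g. "2.2.2.2/32"
    interface: str  # RPF interface
    neighbor: str   # RPF neighbor
    distance: int   # administrative distance


def longest_match(source, routes):
    """Longest-prefix match within one table, or None if nothing matches."""
    addr = ipaddress.ip_address(source)
    matches = [r for r in routes if addr in ipaddress.ip_network(r.prefix)]
    return max(matches, key=lambda r: ipaddress.ip_network(r.prefix).prefixlen, default=None)


def rpf_lookup(source, mroutes, unicast):
    """Best match per table; the lowest distance wins, and the static mroute wins a tie."""
    best = (longest_match(source, mroutes), longest_match(source, unicast))
    candidates = [r for r in best if r is not None]
    return min(candidates, key=lambda r: r.distance, default=None)


def rpf_check(source, arrival_interface, mroutes, unicast):
    """A multicast packet passes the RPF check only if it arrives on the RPF interface."""
    route = rpf_lookup(source, mroutes, unicast)
    return route is not None and route.interface == arrival_interface


unicast = [Route("2.2.2.2/32", "Serial0/0", "192.168.123.1", 110)]
static_mroutes = [Route("2.2.2.2/32", "Tunnel0", "192.168.23.2", 0)]

print(rpf_check("2.2.2.2", "Tunnel0", [], unicast))              # False: "not RPF interface"
print(rpf_check("2.2.2.2", "Tunnel0", static_mroutes, unicast))  # True
print(rpf_lookup("2.2.2.2", static_mroutes, unicast).neighbor)   # 192.168.23.2
```

Because the static mroute only matches 2.2.2.2/32, RPF checks for every other source still fall through to the unicast table.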

Keep in mind that the RPF check applies to both control-plane traffic, such as these Auto-RP messages, and data traffic. I’m creating a static multicast route only for the mapping agent’s address, since R3 learns the RP information through the tunnel interface. Multicast data packets will still be sent over the serial link.

Let’s take a look at the multicast routing table again:

R3#show ip mroute 224.0.1.40

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 224.0.1.40), 00:38:49/stopped, RP 0.0.0.0, flags: DCL

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Tunnel0, Forward/Sparse-Dense, 00:10:15/00:00:00

Serial0/0, Forward/Sparse-Dense, 00:38:49/00:00:00

(2.2.2.2, 224.0.1.40), 00:02:29/00:02:34, flags: LT

Incoming interface: Tunnel0, RPF nbr 192.168.23.2, Mroute

Outgoing interface list:

Serial0/0, Forward/Sparse-Dense, 00:00:44/00:00:00

Now Tunnel0 is the incoming interface, with RPF neighbor 192.168.23.2, and the Mroute keyword shows that the static multicast route is used. This is what we are looking for. Let’s do a little test to see if multicast is working now:

R3(config)#interface loopback 0

R3(config-if)#ip pim sparse-dense-mode

R3(config-if)#ip igmp join-group 239.1.1.1

I will join the 239.1.1.1 multicast group address on R3. Now let’s generate some traffic:

R2#ping 239.1.1.1

Type escape sequence to abort.

Sending 1, 100-byte ICMP Echos to 239.1.1.1, timeout is 2 seconds:

Reply to request 0 from 192.168.23.3, 4 ms

R2 sends a ping to 239.1.1.1 and R3 replies, so the problem is solved!

Here are the final configurations of R1, R2 and R3:

hostname R1

!

ip cef

!

ip multicast-routing

!

interface Loopback0

ip address 1.1.1.1 255.255.255.255

ip pim sparse-dense-mode

!

interface Serial0/0

ip address 192.168.123.1 255.255.255.0

ip pim sparse-dense-mode

!

ip pim send-rp-announce Loopback0 scope 10

!

end

hostname R2

!

ip cef

!

ip multicast-routing

!

interface Loopback0

ip address 2.2.2.2 255.255.255.0

ip pim sparse-dense-mode

!

interface Tunnel0

ip address 192.168.23.2 255.255.255.0

ip pim sparse-dense-mode

tunnel source Loopback0

tunnel destination 3.3.3.3

!

interface Serial0/0

ip address 192.168.123.2 255.255.255.0

ip pim sparse-dense-mode

!

ip pim send-rp-discovery Loopback0 scope 10

!

end

hostname R3

!

ip cef

!

ip multicast-routing

!

interface Loopback0

ip address 3.3.3.3 255.255.255.0

ip pim sparse-dense-mode

ip igmp join-group 239.1.1.1

!

interface Tunnel0

ip address 192.168.23.3 255.255.255.0

ip pim sparse-dense-mode

tunnel source Loopback0

tunnel destination 2.2.2.2

!

interface Serial0/0

ip address 192.168.123.3 255.255.255.0

ip pim sparse-dense-mode

no fair-queue

!

ip mroute 2.2.2.2 255.255.255.255 Tunnel0

!

end
