Migrating applications to the cloud requires planning and research. In this lesson, we’ll take a look at five steps to migrate your on-premises workload to the cloud.

Application discovery

Our first step is to discover, analyze, and categorize your on-premises applications. Not all applications are suitable to migrate to a cloud environment. Here are some items you need to consider:

- Specialized hardware requirements: does your application run on specific hardware? Cloud providers offer different CPU types, including x86 and ARM. Most cloud providers also offer GPUs.

- Operating system: does your application run on an operating system that the cloud provider supports, or can you make it work on another operating system?

- Legacy databases: does your application run on old database server software that a cloud provider might not support?

- Security: does the application have any security measures? Older applications might no longer receive security updates, which might be acceptable in an isolated on-premises environment but not in the cloud.

- Performance requirements: does your application have specific performance requirements? Can a cloud environment meet these requirements? Is your application sensitive to delay?
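One way to make this discovery step repeatable is to record each application in a small inventory and check it against what the target provider offers. The Python sketch below is illustrative only: the `AppProfile` fields, the `assess()` helper, and the "supported" sets are hypothetical placeholders that you would replace with your own inventory and your provider’s actual offerings.

```python
from dataclasses import dataclass

# Hypothetical inventory record: the field names are illustrative, not a standard.
@dataclass
class AppProfile:
    name: str
    cpu_arch: str                   # e.g. "x86_64" or "arm64"
    needs_gpu: bool
    os: str                         # e.g. "ubuntu-22.04"
    database: str                   # e.g. "mysql-8.0"
    receives_security_updates: bool
    latency_sensitive: bool

# Assumed capabilities of the target cloud provider; replace with real data.
SUPPORTED_ARCHS = {"x86_64", "arm64"}
SUPPORTED_OS = {"ubuntu-22.04", "rhel-9", "windows-server-2022"}
SUPPORTED_DATABASES = {"mysql-8.0", "postgresql-15", "sqlserver-2019"}


def assess(app: AppProfile) -> list[str]:
    """Return the concerns found for one application; an empty list means no blocker."""
    concerns = []
    if app.cpu_arch not in SUPPORTED_ARCHS:
        concerns.append(f"unsupported CPU architecture: {app.cpu_arch}")
    if app.needs_gpu:
        concerns.append("needs GPU instances: check availability and cost")
    if app.os not in SUPPORTED_OS:
        concerns.append(f"operating system not offered: {app.os}")
    if app.database not in SUPPORTED_DATABASES:
        concerns.append(f"database server may be unsupported: {app.database}")
    if not app.receives_security_updates:
        concerns.append("no longer receives security updates")
    if app.latency_sensitive:
        concerns.append("latency-sensitive: measure the delay to the nearest region")
    return concerns


if __name__ == "__main__":
    legacy_erp = AppProfile("erp", "sparc", False, "solaris-10", "oracle-9i", False, True)
    for concern in assess(legacy_erp):
        print("-", concern)
```

An application with no concerns is a candidate for an early migration. A long list suggests it belongs in the “legacy” category we discuss next.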

Application types

Broadly speaking, there are two kinds of applications: those that were designed to run in the cloud and those that were not. We call the latter legacy applications. They were designed to run on traditional (virtual) servers. Let’s discuss the difference between the two application types.

Legacy applications

Most applications are legacy applications, but with some modifications, we can make many of them work in the cloud. This is best explained with an example:

WordPress is a popular content management system (CMS). In 2018, roughly 30% of all websites ran on WordPress. Even if you have never worked with WordPress or installed a web server before, you can probably follow this example.

If you want to host WordPress yourself the “traditional” way, the process looks like this:

- Select a (virtual) server with a certain amount of CPU cores, memory, and storage.

- Install a Linux distribution as the operating system.

- Install all required packages:

  - Apache: our web server software.

  - PHP: the scripting language WordPress is written in.

  - MySQL: the database server.

- Download the WordPress files and upload them to your server.

- Add the database name, username, and password to the wp-config.php file.

- Launch your website.

You can perform the above steps manually or use installation scripts to automate these steps.
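As an example of such a script, here is a minimal Python sketch that automates one step: generating the database settings for wp-config.php. It assumes the `define()` format WordPress uses in its wp-config-sample.php file. The `render_db_settings()` helper is hypothetical, and the database name, username, and password are placeholders. In practice, you would merge these lines into a complete wp-config.php rather than write only this section.

```python
def render_db_settings(name: str, user: str, password: str, host: str = "localhost") -> str:
    """Return the database section of wp-config.php as PHP define() calls."""
    settings = {
        "DB_NAME": name,
        "DB_USER": user,
        "DB_PASSWORD": password,
        "DB_HOST": host,
    }
    lines = []
    for constant, value in settings.items():
        # Escape backslashes and single quotes so the PHP string literal stays valid.
        escaped = value.replace("\\", "\\\\").replace("'", "\\'")
        lines.append(f"define( '{constant}', '{escaped}' );")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder credentials: never commit real passwords to a script.
    print(render_db_settings("wordpress", "wp_user", "change-me"))
```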

When your website grows and attracts more visitors, you can scale up by adding CPU cores and/or memory to the server. When your server dies, you have a problem: you have to install a new server and restore a backup.

Traditional servers are like pets. We install, upgrade, and repair them manually. They run for years, so we treat them with love and care. Perhaps we even give them special hostnames.

Cloud applications

Cloud providers offer virtual servers, so you can repeat the above steps on a cloud provider’s virtual server. However, that is no different from regular Virtual Private Server (VPS) hosting: we still end up with a “pet”.

In the cloud, we start new servers on demand and destroy them when they are no longer needed. Servers and other resources are disposable, so we treat them like cattle. We want to spin up servers quickly, so there is no time to configure each new server manually. To achieve this, we need a blueprint and configuration that we can use for all servers.

To create a blueprint, we define our “infrastructure as code”. We describe the infrastructure we need in template files, often written in JSON or YAML. Examples of tools that do this are Amazon AWS CloudFormation and Google Cloud Deployment Manager. Terraform is an infrastructure-as-code tool that supports multiple cloud providers. To install and configure the servers themselves, you can use configuration management and deployment tools like Chef, Puppet, Ansible, and Amazon AWS CodeDeploy. Another option is to use containers, which we discuss in another lesson.
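To give an idea of what such a blueprint looks like, the Python sketch below builds a minimal CloudFormation-style template with a single EC2 web server and prints it as JSON. It only generates the document and does not deploy anything. The `web_server_template()` helper, the instance type, and the AMI ID are illustrative placeholders. A real template for our WordPress example would also need networking, security groups, and the other resources listed later in this lesson.

```python
import json


def web_server_template(instance_type: str, image_id: str) -> dict:
    """Build a minimal CloudFormation-style template with one EC2 web server."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Minimal web server blueprint (illustrative sketch)",
        "Parameters": {
            "InstanceType": {"Type": "String", "Default": instance_type},
        },
        "Resources": {
            "WebServer": {
                "Type": "AWS::EC2::Instance",
                "Properties": {
                    # Ref pulls the value from the InstanceType parameter above.
                    "InstanceType": {"Ref": "InstanceType"},
                    "ImageId": image_id,
                },
            }
        },
    }


if __name__ == "__main__":
    # "ami-0123456789abcdef0" is a placeholder, not a real image ID.
    print(json.dumps(web_server_template("t3.micro", "ami-0123456789abcdef0"), indent=2))
```

Because the blueprint is just a file, every new web server you launch from it is identical. That is exactly what “cattle” requires.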

When it comes to cloud computing, we want to keep cattle, not pets. If you are interested in building cloud-compatible applications, take a look at the Twelve-Factor App methodology. It’s a baseline of best practices for building scalable (web) applications.
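One of the twelve factors (“Config”) recommends storing configuration in environment variables instead of in the code. Identical servers can then be pointed at different databases without rebuilding them. The Python sketch below reads database settings that way. The `DatabaseConfig` class, the `load_db_config()` helper, and the `DB_*` variable names are assumptions chosen for this example.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class DatabaseConfig:
    host: str
    name: str
    user: str
    password: str


REQUIRED = ("DB_HOST", "DB_NAME", "DB_USER", "DB_PASSWORD")


def load_db_config(env: Mapping[str, str] = os.environ) -> DatabaseConfig:
    """Read database settings from environment variables, failing fast if any are missing."""
    missing = [key for key in REQUIRED if key not in env]
    if missing:
        raise RuntimeError(f"missing environment variables: {', '.join(missing)}")
    return DatabaseConfig(env["DB_HOST"], env["DB_NAME"], env["DB_USER"], env["DB_PASSWORD"])
```

Failing at startup when a variable is missing is a deliberate choice. A server that refuses to start is easier to spot than one that runs with a half-configured database connection.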

Let’s walk through an example of how we can run our legacy WordPress application in the cloud:

Infrastructure as code

We create one or more configuration files that define our infrastructure resources:

- Template for our web server(s).

- Template for our database: we use a managed database service like Amazon AWS RDS, Google Cloud SQL, or Azure Database for MySQL for this.

- Template for a load balancer: if you have more than one web server, you need a load balancer to distribute the incoming traffic.

- Template for an autoscaling alarm: for example, when the CPU load of our web server exceeds 50%, we want to start a second web server.

- Template for shared file storage: our web servers need shared access to certain files. I’ll explain why in a bit. You can use a managed storage service like Amazon AWS Elastic File System (EFS), Amazon AWS S3, or Google Cloud Filestore for this.
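The autoscaling alarm boils down to a simple decision rule. The Python sketch below uses the 50% scale-out threshold from the example above. The scale-in threshold of 20% and the limits of one to four servers are assumptions, as is the `desired_servers()` helper itself. Real autoscaling services also average metrics over a time window and apply cooldown periods, which this sketch leaves out.

```python
def desired_servers(current: int, avg_cpu_percent: float,
                    scale_out_at: float = 50.0, scale_in_at: float = 20.0,
                    minimum: int = 1, maximum: int = 4) -> int:
    """Return how many web servers should run, given the average CPU load."""
    if avg_cpu_percent > scale_out_at:
        target = current + 1        # alarm fired: add one web server
    elif avg_cpu_percent < scale_in_at:
        target = current - 1        # load is low: remove one web server
    else:
        target = current            # within the band: leave things as they are
    # Never drop below the minimum or exceed the maximum number of servers.
    return max(minimum, min(maximum, target))


if __name__ == "__main__":
    print(desired_servers(current=1, avg_cpu_percent=72.0))   # one server at 72% CPU
```

The gap between the two thresholds keeps the group from constantly adding and removing a server when the load hovers around a single value.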

Having your infrastructure as code makes it easy to create and replicate resources.

Version Control System (VCS)

You should store your configuration files in a Version Control System (VCS). A VCS keeps track of changes to your files and makes it easy for multiple people to work on the same files. Git is the most popular open-source VCS. GitHub is a popular website where you can host Git repositories.

We also store our WordPress files in a Git repository. Whenever you make changes to your website, you commit them to the repository. This way, no web server holds the only copy of your code, so any server can be destroyed and rebuilt from the repository.

Deployment tool

We configure a deployment tool to install the required packages (Apache and PHP), clone the WordPress Git repository, and copy any other configuration files needed. When we launch a new web server, the deployment tool automatically installs and configures it.
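To illustrate what the deployment tool does on a fresh web server, the Python sketch below lists the equivalent shell commands. It assumes a Debian or Ubuntu image, and the repository URL and document root are hypothetical. Tools like Chef, Puppet, and Ansible express these steps as declarative, idempotent resources instead of raw commands, so treat this as an outline of the steps rather than a replacement for such a tool.

```python
import shlex

# Hypothetical repository URL and document root; substitute your own.
REPO_URL = "https://github.com/example/wordpress-site.git"
DOC_ROOT = "/var/www/html"

# Debian/Ubuntu package names for Apache, PHP, PHP's MySQL extension, and Git.
PACKAGES = ["apache2", "php", "libapache2-mod-php", "php-mysql", "git"]


def provisioning_commands(repo_url: str = REPO_URL, doc_root: str = DOC_ROOT) -> list[str]:
    """Return the shell commands a deployment tool would run on a fresh web server."""
    return [
        "apt-get update",
        "apt-get install -y " + " ".join(PACKAGES),
        # git clone refuses to write into a non-empty directory, so remove the default one.
        f"rm -rf {shlex.quote(doc_root)}",
        f"git clone {shlex.quote(repo_url)} {shlex.quote(doc_root)}",
        "systemctl restart apache2",
    ]


if __name__ == "__main__":
    for command in provisioning_commands():
        print(command)
```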

Shared file storage

WordPress uses different files and folders. We have PHP and CSS files for the website and we store these in a git repository. When we start a new web server, we copy these files from the git repository to the local storage of the web server.

There are two problems, though. Like many legacy applications, WordPress expects to be able to create or modify files and folders on its local storage.

When you update a WordPress plugin, the web server deletes the old plugin files and installs the new ones on its local storage. That change exists only on that one server and is lost when the server is destroyed. We can work around this by installing new plugins on a local development web server. When the plugin works, we add the new files to our Git repository and build a new web server with our deployment tool.

The other problem is file uploads. For example, when you upload an image to your WordPress website, the image is stored on the local storage of the web server. When we destroy the web server, the image is gone. To work around this, we redirect the WordPress uploads folder to shared file storage. You can do this at the operating system level with an NFS file share or with a WordPress plugin that uploads images directly to S3.
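The operating-system approach can be as simple as replacing the wp-content/uploads folder with a symbolic link to the shared mount. The Python sketch below shows the idea. The `redirect_uploads()` helper and its paths are hypothetical, and it assumes the NFS share (for example, EFS) is already mounted. In practice, your deployment tool would run this step. The S3 plugin alternative is not shown.

```python
import shutil
import tempfile
from pathlib import Path


def redirect_uploads(wp_root: Path, shared_dir: Path) -> Path:
    """Point wp-content/uploads at a shared directory, such as an NFS mount."""
    uploads = wp_root / "wp-content" / "uploads"
    shared_dir.mkdir(parents=True, exist_ok=True)
    uploads.parent.mkdir(parents=True, exist_ok=True)
    if uploads.is_symlink():
        uploads.unlink()                # replace an existing link
    elif uploads.is_dir():
        # Move files uploaded before the switch onto the shared storage.
        for item in uploads.iterdir():
            shutil.move(str(item), str(shared_dir / item.name))
        uploads.rmdir()
    uploads.symlink_to(shared_dir, target_is_directory=True)
    return uploads


if __name__ == "__main__":
    # Temporary directories stand in for the web server and the NFS mount.
    with tempfile.TemporaryDirectory() as tmp:
        server = Path(tmp) / "server"
        nfs = Path(tmp) / "nfs" / "uploads"
        link = redirect_uploads(server, nfs)
        (link / "photo.jpg").write_bytes(b"fake image")
        print((nfs / "photo.jpg").exists())
```

Every web server gets the same link, so an image uploaded through one server is immediately visible to the others and survives when that server is destroyed.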

Considerations

Besides figuring out whether you can make your applications work in the cloud, here are some other items to consider:

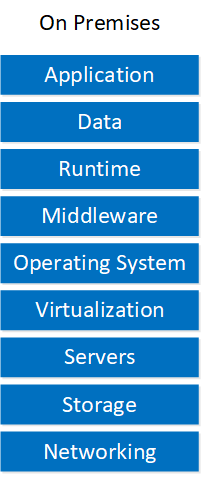

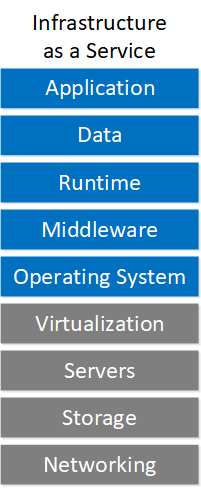

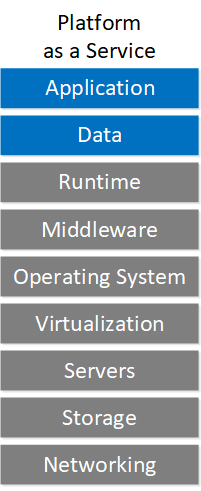

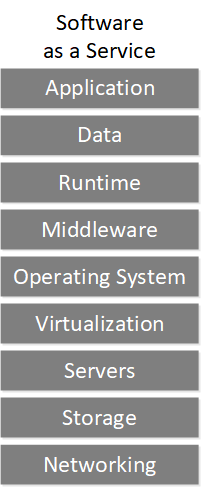

- Service model: which cloud resources (IaaS, PaaS, or SaaS) will you use?

- Cloud deployment type: which deployment type (public, private, community, or hybrid) suits your requirements?

- Availability: do you make your application available in one or multiple regions? How much redundancy do you need?

- Complexity: how difficult is it to migrate the application to the cloud?

- Security: are there any security considerations when you move the application to the cloud?

- Environment: how will you implement your production, development, and staging environments?

- Regulatory compliance: which regulations apply to your organization, and can you still meet them in the cloud?

- Dependencies: does your application have any dependencies that you can’t move to the cloud?

- Business impact: how critical is the application? You might want to start with a less critical application when this is your first migration.

- Networking: how will you access the application? Is your WAN connection sufficient?

- Hardware dependencies: does your application have any hardware dependencies? Can you run the application in the cloud?

- Cost: don’t underestimate costs. In the cloud, you pay for all resources, and some costs are easy to overlook beforehand, such as data transfer and log file storage.
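A quick back-of-the-envelope calculation shows how these “hidden” items add up next to compute costs. In the Python sketch below, every price is a hypothetical placeholder, not a real provider rate, and the `monthly_cost()` helper is illustrative. The 730 hours per month is a common approximation of one month of continuous running. Use your provider’s pricing calculator for real numbers.

```python
HOURS_PER_MONTH = 730  # approximately 24 hours * 365 days / 12 months


def monthly_cost(servers: int, price_per_server_hour: float,
                 egress_gb: float, price_per_egress_gb: float,
                 log_gb: float, price_per_log_gb: float) -> dict[str, float]:
    """Estimate one month of compute, data transfer, and log storage costs."""
    compute = servers * price_per_server_hour * HOURS_PER_MONTH
    egress = egress_gb * price_per_egress_gb
    logs = log_gb * price_per_log_gb
    return {
        "compute": round(compute, 2),
        "egress": round(egress, 2),
        "logs": round(logs, 2),
        "total": round(compute + egress + logs, 2),
    }


if __name__ == "__main__":
    # Hypothetical prices: 0.05 per server-hour, 0.09 per GB out, 0.50 per GB of logs.
    print(monthly_cost(2, 0.05, 500, 0.09, 100, 0.50))
```

With these made-up rates, two servers cost 73.00 per month, while data transfer (45.00) and logs (50.00) together cost more than the servers themselves.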

Migration strategies

There are different migration strategies if you want to move your on-premises applications to the cloud. Gartner describes five migration strategies:

- Rehost: move the application from on-premises to a virtual server in the cloud. This is a quick way to migrate your application, but you miss out on one of the most important cloud characteristics: scalability.

- Refactor: move the application to a PaaS solution so you can reuse the frameworks, programming languages, and containers you currently use. An example is moving your Docker containers to Google Kubernetes Engine.

- Revise: some applications require changes before you can rehost or refactor them in the cloud. An example is a complex Python application that you need to break down and modify so you can run it on a serverless computing platform like Amazon AWS Lambda or Google App Engine.

- Rebuild: discard the existing application code and rewrite the application to use the cloud provider’s resources. Rebuilding your application from scratch with the cloud in mind lets you use all of the cloud provider’s features, but it also increases the risk of vendor lock-in.

- Replace: retire your application and replace it with a SaaS solution. You don’t have to invest time and money in making your application suitable for the cloud, but migrating your data from your application to a SaaS application can introduce its own problems.

Cloud providers also offer their own migration strategies.

Evaluation and testing

Once you have analyzed your applications and decided on a migration strategy, evaluate and test your options:

- Perform a pre-migration performance baseline of each application.

- Research and evaluate different cloud providers, their services, and SLAs.

- Evaluate automation and orchestration tools. Are you going to use tools from the cloud provider or tools that are not tied to a specific cloud provider?

- Evaluate monitoring and logging tools. Will you use a tool like Amazon AWS CloudWatch or an external solution like Datadog or Elasticsearch?
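A pre-migration performance baseline can start as simply as timing a representative request many times and keeping a few summary numbers. The Python sketch below does that. The `measure_baseline()` helper is hypothetical. The `request` callable is an assumption: for a real application it would call your website, for example with `urllib.request.urlopen`, while the demo uses `time.sleep` as a stand-in. The p95 value uses a simple nearest-rank approximation.

```python
import statistics
import time
from typing import Callable


def measure_baseline(request: Callable[[], None], samples: int = 50) -> dict[str, float]:
    """Time `request` repeatedly and summarize its latency in milliseconds."""
    latencies = []
    for _ in range(samples):
        start = time.perf_counter()
        request()
        latencies.append((time.perf_counter() - start) * 1000)
    latencies.sort()
    return {
        "p50_ms": statistics.median(latencies),
        # Nearest-rank approximation of the 95th percentile.
        "p95_ms": latencies[int(0.95 * (len(latencies) - 1))],
        "max_ms": latencies[-1],
    }


if __name__ == "__main__":
    # Stand-in for a real request; replace with a call to your application.
    print(measure_baseline(lambda: time.sleep(0.01), samples=20))
```

Run the same measurement, from the same location and at a comparable time of day, before and after the migration so the numbers are comparable.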

Once you have evaluated your options, you can test your migration.

Execute and manage migration

If this is your first migration to the cloud, take it easy and start with the “low-hanging fruit”: a non-critical application that is easy to migrate. During this migration, you can become familiar with the process, fine-tune it, and document the lessons you have learned.

After migration

Once the migration is complete, perform a post-migration performance baseline and compare the results with your pre-migration performance baseline. Cisco AppDynamics is a product that can do this work for you: it collects application metrics, establishes a performance baseline, and reports when performance deviates from that baseline.
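If you compare baselines by hand, the core of the comparison is a percentage change per metric. The Python sketch below flags metrics that got worse by more than a tolerance. The `compare_baselines()` helper and its 10% tolerance are assumptions, and it treats higher values as worse, which fits latency metrics but not throughput. It is not how AppDynamics works internally, just an illustration of the comparison.

```python
def compare_baselines(before: dict[str, float], after: dict[str, float],
                      tolerance_pct: float = 10.0) -> dict[str, float]:
    """Return {metric: percent increase} for metrics that regressed beyond the tolerance."""
    regressions = {}
    for metric, old in before.items():
        new = after.get(metric)
        if new is None or old == 0:
            continue                    # metric missing or no meaningful baseline
        change_pct = (new - old) / old * 100
        if change_pct > tolerance_pct:
            regressions[metric] = round(change_pct, 1)
    return regressions


if __name__ == "__main__":
    pre = {"p50_ms": 120.0, "p95_ms": 300.0}
    post = {"p50_ms": 126.0, "p95_ms": 390.0}
    print(compare_baselines(pre, post))
```

In this example, the median latency rose by only 5%, within the tolerance, but the p95 latency rose by 30%. That kind of tail-latency regression is easy to miss if you only look at averages.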

Conclusion

You have now learned about the steps required to migrate your on-premises workload (applications) to the cloud:

- Application discovery: figure out the requirements of your applications and research if they are suitable to run in the cloud.

- Legacy applications are designed for traditional servers.

- Traditional servers are our “pets” or “snowflakes”. We install, update, and repair them mostly manually and care for them.

- You can make changes to some legacy applications to make them work in the cloud.

- In the cloud, we run resources on demand, so there is no time to install servers manually.

- We define our infrastructure resources in configuration files with tools like CloudFormation or Terraform. We call this “infrastructure as code”.

- We use a VCS to store configuration files.

- We use deployment tools to install new servers automatically.

- Migration strategies: there are different strategies to migrate your applications to the cloud. The Gartner migration strategies are popular, and cloud providers also offer their own migration strategies.

- Evaluation and testing: once you have analyzed your applications and decided on a migration strategy, evaluate cloud providers, tools, and options, then test your migration.

- Execute and manage migration: start with a non-critical application that is easy to migrate so you can fine-tune and learn the process.

- After migration: compare your pre-migration performance baseline with your post-migration performance baseline.

I hope you enjoyed this lesson. If you have any questions, please leave a comment!