Please note that the term Shared Services, which is widely used in most of the documentation, simply refers to Inter VRF/Tenant communication.

I would like to mention that the level of documentation Cisco has produced for ACI is commendable. You can find a detailed guide for all the bells and whistles in ACI on the official Cisco site.

Then, Why Am I Writing This?

Well, I think there is a very critical design guideline around Inter VRF/Tenant route leaking methods in ACI that I should highlight. So, I am just trying to do that…

Note: This is an advanced topic in ACI and I assume you have a working knowledge of ACI components like Tenant, EPG, BD, VRF, Contract etc. So, let’s begin…

Need for Inter VRF/Tenant Communication

The first thing we need to understand is in which cases Inter VRF/Tenant communication will be needed:

- Shared Services – Meaning, you have an EPG hosting common shared services in one VRF (shared service provider) and there can be single or multiple EPGs in another VRF (shared service consumers) using the shared services.

- Ad-Hoc – Two or more specific EPGs in separate VRFs may need to communicate with each other as per requirement, e.g. a multi-tier application setup with the EPG for each tier in a separate VRF.

Role of Contracts

As you must be aware, all communication in ACI is governed by contracts. Even in the case of inter VRF communication, we need contracts between the EPGs. This is where the dual role of the contract comes into the picture. There are two roles of contracts in inter VRF/tenant communication:

- Access control with the help of subjects and filters defined in the contracts

- Route Leaking between consumer and provider VRFs (I know many of us looked at contracts as ACLs, it’s more than that)

Contract Scope is an important attribute. For inter VRF communication, it can be either of the two mentioned below:

- Scope: Tenant – Provider and consumer EPGs are in different VRFs but the same tenant.

- Scope: Global – Provider and consumer EPGs are in different VRFs as well as different tenants.
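The scope rule above can be sketched as a small helper. This is only an illustration: `Epg` and `required_contract_scope` are hypothetical names, not part of any Cisco tool, and the sketch assumes that EPGs in different tenants are always in different VRFs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Epg:
    """Hypothetical record describing where an EPG lives."""
    name: str
    tenant: str
    vrf: str


def required_contract_scope(provider: Epg, consumer: Epg) -> str:
    """Return the contract scope for the two inter-VRF cases in this post.

    Assumption: a VRF is identified by (tenant, vrf name), so EPGs in
    different tenants are treated as being in different VRFs.
    """
    if (provider.tenant, provider.vrf) == (consumer.tenant, consumer.vrf):
        raise ValueError("both EPGs are in the same VRF; not an inter-VRF case")
    if provider.tenant == consumer.tenant:
        return "tenant"   # different VRFs, same tenant
    return "global"       # different VRFs, different tenants


# Hypothetical EPGs for illustration.
web = Epg("web", tenant="T1", vrf="VRF1")
db = Epg("db", tenant="T1", vrf="VRF2")
dns = Epg("dns", tenant="T2", vrf="VRF1")

print(required_contract_scope(web, db))   # tenant
print(required_contract_scope(dns, web))  # global
```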

Few Considerations

- In the case of inter tenant communication between two user tenants, the contract must be created in the provider tenant and exported to the consumer tenant. On the consumer side, the exported contract has to be attached to the consumer EPG as a Consumed Contract Interface.

- The common tenant comes with superpowers: if you have one EPG in a user tenant and another EPG in the common tenant, create the contract in the common tenant. That eliminates the overhead of exporting the contract, as contracts created in the common tenant can be directly attached to EPGs in a user tenant as a provided or consumed contract.

- In the case of inter vrf communication within the same tenant, the contract just has to be provided and consumed by the respective EPGs as we normally do; contract export is not required.

- Since the subnets of the two VRFs will be leaked to each other, they have to be unique and non-overlapping.
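The last consideration is easy to check before any configuration is pushed. Below is a minimal sketch using Python's standard `ipaddress` module; the `find_overlaps` helper and the sample subnets are hypothetical and only illustrate the idea.

```python
import ipaddress
from itertools import combinations


def find_overlaps(subnets):
    """Return pairs of overlapping prefixes from a list of CIDR strings.

    strict=False lets us pass gateway-style addresses such as 10.1.1.1/24.
    """
    networks = [ipaddress.ip_network(s, strict=False) for s in subnets]
    return [
        (str(a), str(b))
        for a, b in combinations(networks, 2)
        if a.overlaps(b)
    ]


# Hypothetical shared subnets collected from both VRFs.
shared = ["10.1.1.1/24", "10.2.0.1/16", "10.2.5.1/24"]
print(find_overlaps(shared))  # [('10.2.0.0/16', '10.2.5.0/24')]
```

An empty list means the shared subnets are safe to leak into each other's VRF.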

Design Approach

The design approach is selected based on whether there will be a single EPG or multiple EPGs (in Provider VRF) serving as the shared service provider to the EPGs in consumer VRF. Basically, there are two ways of doing the route leaking and policy enforcement between EPGs in separate VRFs.

1. Shared Services with Subnet defined under provider EPG (Usage: when there is only single EPG in provider VRF which will serve as a shared service provider)

2. BD-BD Shared Services (Usage: when there are multiple EPGs in provider VRF that can serve as the shared service providers)

Let's get into more details of both methods one by one:

1. Shared Services with Subnet defined under provider EPG

This is the preferred method to configure inter vrf/tenant communication as per the ACI Best Practices guide, and it works perfectly fine for shared services requirements (until you reach the Limitations section below). There are tons of documents online that would serve as a step by step configuration guide for this method. I am taking an example of EPGs in separate tenants and VRFs to explain this method.

Procedure:

- Define the provider side subnet at EPG level and mark it as Shared between VRFs (define the subnet at EPG level even if the same subnet is already defined at BD level because other EPGs use it too, as in an application-centric deployment).

- Define the consumer side subnet at BD or EPG level and mark it as Shared between VRFs.

- Create a contract in the provider tenant and attach it to the provider EPG as a provided contract.

- Export that contract from the provider tenant to the consumer tenant, and attach it to the consumer EPG as a consumed contract interface.
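For those who prefer the API over the GUI, the four steps above can be sketched as Python dictionaries shaped like APIC REST payloads. Treat this as a rough sketch only: the class and attribute names (`fvSubnet`, `vzBrCP`, `fvRsProv`, `vzCPIf`, `vzRsIf`, `fvRsConsIf`) and the `private,shared` scope value are assumptions about the APIC object model that should be verified against your APIC release, and every tenant, EPG, contract name and IP is hypothetical.

```python
import json

# All object names and IPs are hypothetical. Class/attribute names are
# assumptions about the APIC object model; verify before use.


def shared_subnet(gateway_ip):
    """Subnet flagged as Shared between VRFs."""
    return {"fvSubnet": {"attributes": {"ip": gateway_ip, "scope": "private,shared"}}}


def contract(name):
    """Contract in the provider tenant; global scope for inter tenant use."""
    return {"vzBrCP": {"attributes": {"name": name, "scope": "global"}}}


def provider_epg(name, gateway_ip, contract_name):
    """Provider EPG: subnet under the EPG, contract attached as provided."""
    return {"fvAEPg": {"attributes": {"name": name}, "children": [
        shared_subnet(gateway_ip),
        {"fvRsProv": {"attributes": {"tnVzBrCPName": contract_name}}},
    ]}}


def exported_contract(interface_name, provider_tenant, contract_name):
    """Contract interface in the consumer tenant pointing at the contract."""
    target = f"uni/tn-{provider_tenant}/brc-{contract_name}"
    return {"vzCPIf": {"attributes": {"name": interface_name}, "children": [
        {"vzRsIf": {"attributes": {"tDn": target}}},
    ]}}


def consumer_epg(name, gateway_ip, interface_name):
    """Consumer EPG: shared subnet (here under the EPG) and the consumed
    contract interface."""
    return {"fvAEPg": {"attributes": {"name": name}, "children": [
        shared_subnet(gateway_ip),
        {"fvRsConsIf": {"attributes": {"tnVzCPIfName": interface_name}}},
    ]}}


print(json.dumps(provider_epg("EPG1", "10.1.1.1/24", "shared-svc"), indent=2))
```

The sketch only builds the payloads; posting them to an APIC (authentication, URLs, parent objects such as the tenant and application profile) is left out on purpose.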

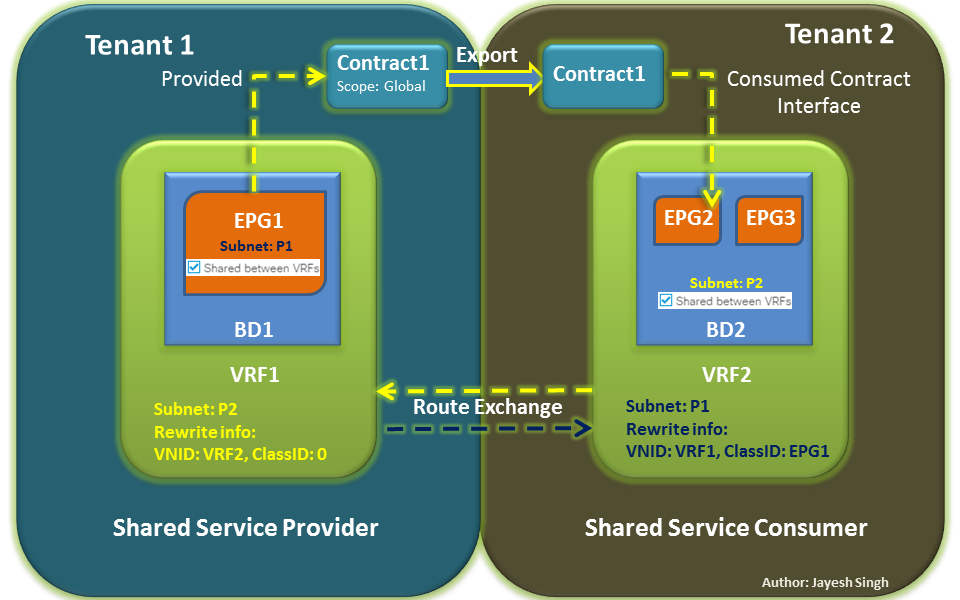

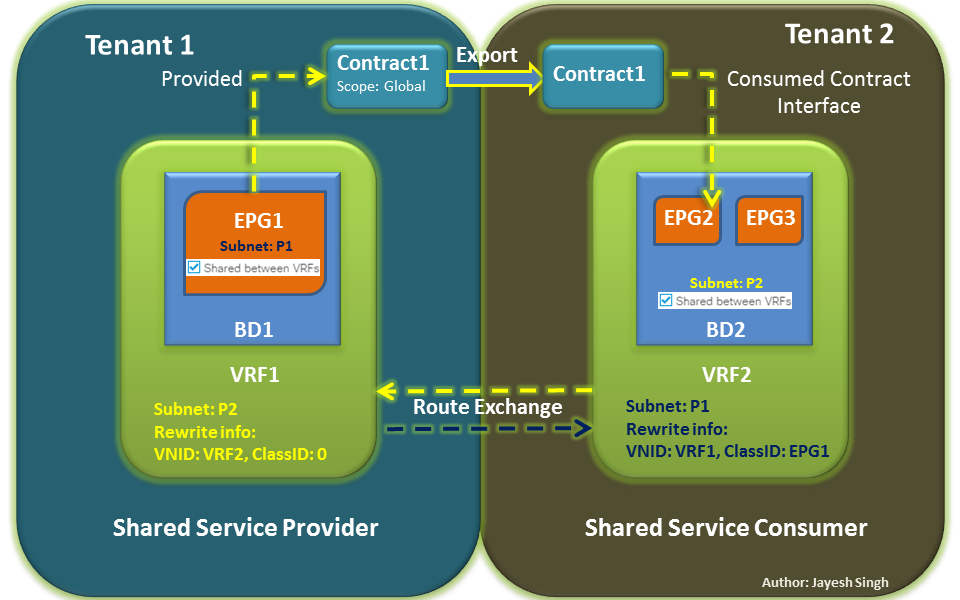

A logical representation of the setup with the provider and consumer EPGs in different Tenants is shown in the diagram below:

What Happens Under the Hood?

Provider Side:

- As soon as the contract is attached at both provider and consumer EPGs, consumer side prefix P2 is leaked into the routing table of provider side VRF (VRF1).

- Along with the routes, VNID rewrite information corresponding to the consumer side VRF (VRF2) and ClassID is also installed in VRF1. Please note that the ClassID, which is nothing but the pcTag (EPG ID), is set to 0, meaning there is no consumer EPG information associated with the leaked prefix.

- Policy is also not programmed in provider VRF. So, on the provider side, it just forwards the packet based on routing information and policy is never applied on this side.

Consumer Side:

- Provider side prefix P1 defined at EPG level is installed into the routing table of consumer side VRF (VRF2).

- Along with the prefix, VNID rewrite information corresponding to the provider side VRF (VRF1) and ClassID is also installed in VRF2. Please note that the ClassID on the consumer side corresponds to EPG1 (provider EPG). This can also be considered a 1:1 static mapping of the provider side subnet to the provider side EPG.

- Any incoming or outgoing traffic belonging to the provider subnet(P1) will be classified as EPG1.

- The contract is programmed in consumer VRF (VRF2). So, in this method, the policy is applied at the consumer side only and never on the provider side.
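To make the asymmetry concrete, here is a toy model of what each VRF ends up holding in this method. It is not how the leaf stores anything internally; `LeakedRoute`, `leak_epg_subnet_method` and all VNID, pcTag and prefix values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LeakedRoute:
    prefix: str
    rewrite_vnid: int  # VNID of the VRF that owns the prefix
    class_id: int      # pcTag of the owning EPG, or 0 if none is attached


def leak_epg_subnet_method(provider, consumer):
    """Return (routes in provider VRF, routes in consumer VRF, policy VRF)."""
    # Provider VRF learns the consumer prefix, but with ClassID 0.
    provider_vrf_routes = [LeakedRoute(consumer["prefix"], consumer["vnid"], 0)]
    # Consumer VRF learns the provider prefix, pinned to the provider EPG.
    consumer_vrf_routes = [
        LeakedRoute(provider["prefix"], provider["vnid"], provider["pctag"])
    ]
    # The contract is programmed only in the consumer VRF.
    return provider_vrf_routes, consumer_vrf_routes, consumer["vrf"]


# Hypothetical values for illustration only.
PROVIDER = {"vrf": "VRF1", "vnid": 2097152, "prefix": "10.1.1.0/24", "pctag": 32771}
CONSUMER = {"vrf": "VRF2", "vnid": 2195456, "prefix": "10.2.2.0/24", "pctag": 49155}

in_vrf1, in_vrf2, policy_vrf = leak_epg_subnet_method(PROVIDER, CONSUMER)
print("VRF1:", in_vrf1)
print("VRF2:", in_vrf2)
print("Policy enforced in:", policy_vrf)
```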

Limitations

In a nutshell, this method works like having a 1:1 static mapping of the provider side subnet to the provider side EPG in the consumer VRF.

What if two provider EPGs use the same subnet, as we normally have in an application-centric deployment, and both need to provide services to the consumer? If we follow the same method, what would be the ClassID (EPG ID) for the provider’s leaked subnet installed on the consumer side?

Well, both providers will keep fighting (not literally), and at any given time the ClassID of only one provider EPG will be programmed in the consumer VRF, leaving the other one inaccessible to the consumer (contract drop condition).
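The conflict can be pictured with a plain dictionary: a leaked prefix can carry only one ClassID, so the second EPG overwrites the first. This is a toy model with hypothetical names and pcTags; which EPG actually wins on a real fabric is not something this sketch tries to predict, here it is simply the last one written.

```python
def program_consumer_vrf(provider_epgs):
    """Map each leaked prefix to a single ClassID, as one route entry would."""
    table = {}
    for epg in provider_epgs:
        table[epg["prefix"]] = epg["pctag"]  # same prefix: last write wins
    return table


def unreachable_providers(provider_epgs, table):
    """Provider EPGs whose pcTag is not the one pinned to their prefix."""
    return [e["name"] for e in provider_epgs if table[e["prefix"]] != e["pctag"]]


# Two hypothetical provider EPGs sharing one BD subnet.
providers = [
    {"name": "EPG1a", "prefix": "10.1.1.0/24", "pctag": 32771},
    {"name": "EPG1b", "prefix": "10.1.1.0/24", "pctag": 32772},
]

table = program_consumer_vrf(providers)
print(table)                                    # {'10.1.1.0/24': 32772}
print(unreachable_providers(providers, table))  # ['EPG1a']
```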

Interesting, isn’t it? That’s when the second method, a.k.a. the poor brother of the first method, comes to the rescue…

2. BD-BD Shared Services

In my opinion, this method is highly underrated because of its low-profile appearance in shared services deployment guides. It is also treated as the workaround for the limitation we saw in the first method.

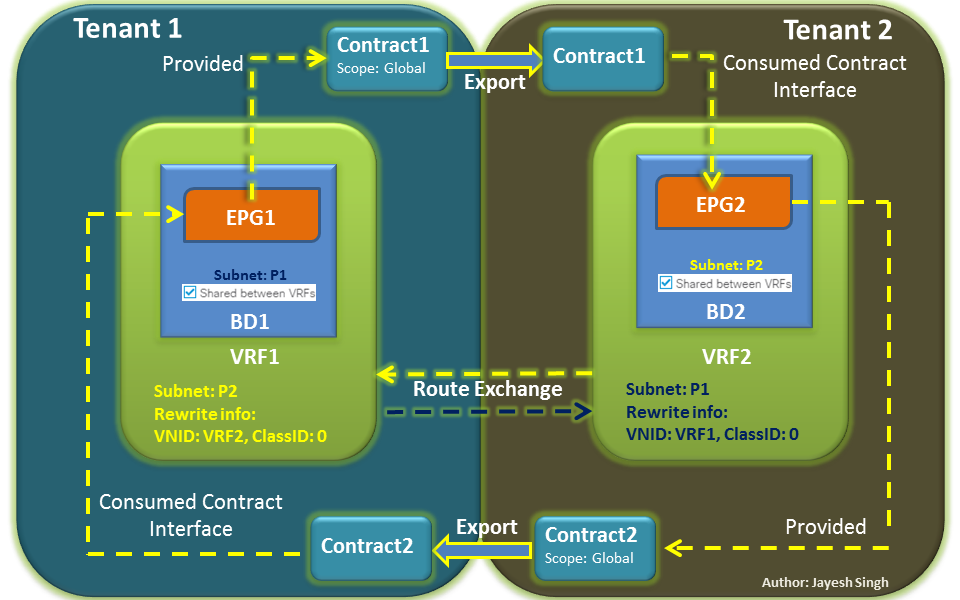

In the BD-BD shared services method, EPGs in both VRFs form a provider as well as a consumer relation with each other. It’s a kind of 360-degree relationship, making both of them a shared service provider as well as a shared service consumer. Again, I am taking the example of EPGs in separate tenants and VRFs to explain this method.

Procedure:

- Define the subnets at BD level for both EPGs in both VRFs and mark them as Shared between VRFs.

- There is no need to define subnet at EPG level; route leaking is done between BDs.

- Create a contract in both tenants, then provide and consume on both sides. It’s like configuring contracts as we did in the first method, but twice and in both directions.

- What if you don’t need both EPGs to be a provider as well as a consumer? Well, you still need to form both consumer and provider relations on both sides.

- In that case, you can make the second contract a dummy contract, with a filter matching a port on which no service is running, or denying any port that is not required. It doesn’t need to enable communication; it is only required to form the relationship. To save TCAM space, leave Apply Both Directions unchecked.
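The "provide and consume on both sides" rule above is easy to get wrong, so a quick sanity check can help. The sketch below is a hypothetical helper that works on a plain dictionary of EPG relations; the EPG and contract names are made up.

```python
def missing_relations(epg_contracts):
    """List EPGs that lack a provided or a consumed contract.

    epg_contracts maps an EPG name to
    {"provided": [contract names], "consumed": [contract names]}.
    """
    problems = []
    for epg, relations in epg_contracts.items():
        for role in ("provided", "consumed"):
            if not relations.get(role):
                problems.append(f"{epg}: no {role} contract")
    return problems


# EPG1 offers the real service; the dummy contract closes the loop.
with_dummy = {
    "EPG1": {"provided": ["shared-svc"], "consumed": ["dummy"]},
    "EPG2": {"provided": ["dummy"], "consumed": ["shared-svc"]},
}
without_dummy = {
    "EPG1": {"provided": ["shared-svc"], "consumed": []},
    "EPG2": {"provided": [], "consumed": ["shared-svc"]},
}

print(missing_relations(with_dummy))     # []
print(missing_relations(without_dummy))
```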

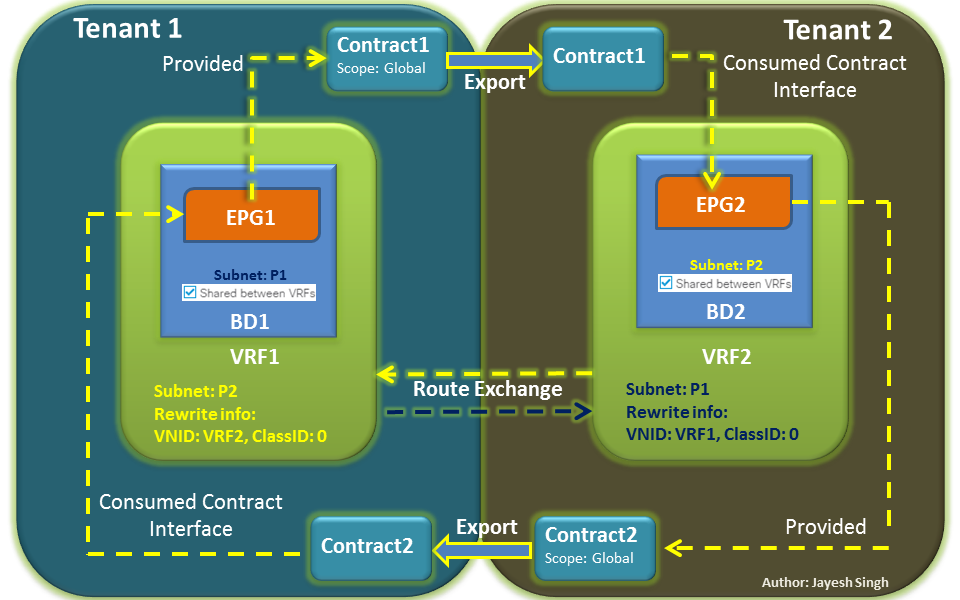

A logical representation of the setup with the provider and consumer EPGs in different Tenants is shown in the diagram below:

What Happens Under the Hood?

If you see the diagram, everything is identical on both sides. Routes are leaked between both VRFs along with VNID rewrite info; contracts (policies) are programmed in both VRFs.

One thing to notice is that the ClassID on both sides is set to 0. This means there is no EPG information associated with the leaked subnets. Hence, there is no static mapping of the subnet to an EPG ID.

In this case, what essentially happens is that, to classify the endpoint, a lookup is done in the actual endpoint table, which contains exact /32 host IPs and EPG information. Compare this with the first method, where the endpoint is classified based on the provider subnet defined at EPG level.

So even if two EPGs are using the same subnet, there won’t be any impact: no static mapping of the subnet to an EPG is involved, and the endpoint classification process is more granular.

Once the endpoint is classified, the policy is applied as per the contract between both the EPGs.
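The difference between the two classification styles can be sketched as follows. This is a simplified model, not the leaf's real lookup pipeline: it does a first-match instead of a longest-prefix match, and `classify`, the prefixes, host IPs and pcTags are all hypothetical.

```python
import ipaddress


def classify(ip, leaked_routes, endpoint_table):
    """Return the pcTag used for policy, or None if the IP is unknown.

    leaked_routes:  prefix -> ClassID (0 means no EPG pinned to the prefix)
    endpoint_table: host IP -> pcTag (the exact /32 entries)
    """
    addr = ipaddress.ip_address(ip)
    for prefix, class_id in leaked_routes.items():
        if addr in ipaddress.ip_network(prefix):
            if class_id != 0:
                return class_id            # method 1: subnet pinned to one EPG
            return endpoint_table.get(ip)  # BD-BD: per-endpoint lookup
    return None


# Two hypothetical endpoints in the same subnet but different EPGs.
endpoints = {"10.1.1.10": 32771, "10.1.1.20": 32772}

epg_subnet_method = {"10.1.1.0/24": 32771}
bd_bd_method = {"10.1.1.0/24": 0}

print(classify("10.1.1.20", epg_subnet_method, endpoints))  # 32771
print(classify("10.1.1.20", bd_bd_method, endpoints))       # 32772
```

With the subnet pinned to one EPG, the second endpoint is classified into the wrong EPG; with ClassID 0, each endpoint gets its own pcTag.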

Limitations

The BD-BD shared services method requires both sides to have provider and consumer contracts, regardless of whether they are needed for communication or not.

This eats up the TCAM space and should be chosen only when you have more than one EPG in the same VRF to be the shared service provider to the consumer.

Quickbits

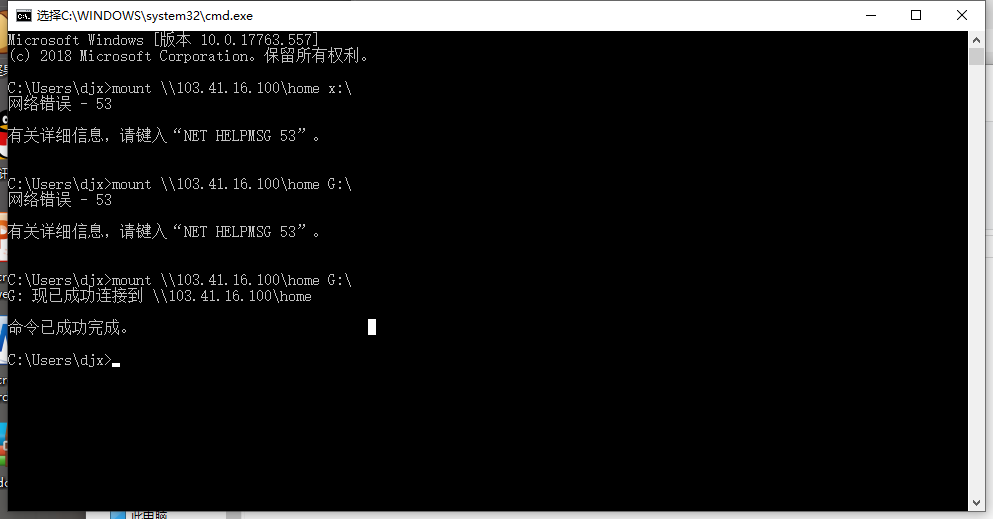

Verify the VNID rewrite information and ClassID on the leaf switches using the following commands:

Leaf-1#vsh

Leaf-1#show ip route vrf <tenant:vrf_name> <imported_IP_prefix> detail

Read the VRF crossing information section to get the details. The ClassID is shown in hex; converted to decimal, it gives the pcTag of the EPG.
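If you don't want to do the hex conversion by hand, a one-liner is enough. The helper name and the sample value `0x8003` are hypothetical; use whatever ClassID your own leaf output shows.

```python
def class_id_to_pctag(class_id_hex):
    """Convert a hex ClassID string (with or without 0x) to a decimal pcTag."""
    return int(class_id_hex, 16)


print(class_id_to_pctag("0x8003"))  # 32771
print(class_id_to_pctag("0"))       # 0, i.e. no EPG attached to the prefix
```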

Hope this post is helpful for all the ACI Experts out there. Your feedback will be highly appreciated!

Regards,

Jayesh

References:

https://www.cisco.com/c/en/us/td/docs/switches/datacenter/aci/apic/sw/1-x/ACI_Best_Practices/b_ACI_Best_Practices.html

https://ciscolive.cisco.com/on-demand-library/?search=aci&search.event=ciscoliveus2018#/session/1509501653465001PRkT